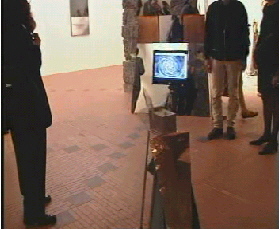

InfoMus Lab

Interactive installation for the exhibition "Arti Visive 2"

Palazzo Ducale, Genova, October 1998

Aims

Exploring new forms of interaction involving music, gesture, robots, and visual languages.

Experimenting with new interactive multimedia technologies developed in our lab on Kansei, or the Technology of Emotion, in collaboration with the Hashimoto Laboratory at Waseda University, Tokyo.

Description

A wheeled robot equipped with sensors and a video camera roams freely around the museum exhibit, like a visitor of a particular kind. It acts as a sort of interface between the humans and the machines that cohabit the exhibition area. Its sensors allow it to avoid collisions with visitors, but also to observe their behavior, to interact with them, and to observe the museum artworks.

Interaction with the visitors

Visitors can communicate with the robot by moving around it, showing themselves and acting in front of its "eyes," obstructing its navigation, following it, ignoring it, and so on. The robot interprets some stimuli as positive (e.g., following it without obstructing it, which demonstrates interest in what it does) and others as negative (e.g., blocking it and forcing it to stop, or ignoring it while passing next to it).

A computational model of artificial emotions controls the "emotional state" or "mood" of the robot on the basis of these stimuli.
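The source does not describe the internals of the emotion model. As a minimal sketch of how stimuli could drive a mood value, the following assumes a single "valence" number that decays toward neutral and is pushed up or down by each stimulus. The stimulus names mirror the examples above; the weights, the decay factor, the thresholds, and the `Mood` class itself are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical stimulus weights. The stimulus names follow the examples in
# the text; the numeric values are illustrative assumptions.
STIMULUS_WEIGHTS = {
    "followed": 0.3,   # visitor follows without obstructing: positive
    "blocked": -0.4,   # visitor forces the robot to stop: negative
    "ignored": -0.1,   # visitor passes by without paying attention: negative
}


@dataclass
class Mood:
    valence: float = 0.0  # -1.0 (negative) to +1.0 (positive)
    decay: float = 0.9    # fraction of the mood kept at each step (assumed)

    def update(self, stimuli):
        """Decay toward neutral, add weighted stimuli, clamp to [-1, 1]."""
        self.valence *= self.decay
        for name in stimuli:
            # Unknown stimuli are treated as neutral.
            self.valence += STIMULUS_WEIGHTS.get(name, 0.0)
        self.valence = max(-1.0, min(1.0, self.valence))
        return self.valence

    def label(self):
        """Coarse mood name; the 0.2 thresholds are arbitrary."""
        if self.valence > 0.2:
            return "happy"
        if self.valence < -0.2:
            return "irritated"
        return "neutral"


mood = Mood()
mood.update(["followed"])
print(mood.label())
```

The decay term keeps a single encounter from fixing the mood permanently, which is one plausible way to obtain a state that evolves over the course of a visit.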

The emotional state is expressed in different ways and modalities: visual media (on computer screens around the exhibit), the real-time synthesis and processing of sound and music signals (e.g., spatialisation), and the way of moving (slow and soft, nervous and quick, tail-wagging, etc.). When the robot meets a visitor, it shows its emotional state by increasing the intensity of its music, displaying the state of the "emotional mirror," and moving in a style that reflects that state.

The "emotional mirror"

The visual component is based on the idea of an "emotional mirror": the robot's video camera captures, for example, the face of a person or an artwork. The images are sent over a wireless radio link and shown on the video screens, distorted according to the emotional processes in the robot. For example, the face of a person will appear on the video screens in the installation deformed into a vortex or colorised, depending on the robot's emotional filter.
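The two effects named above, a vortex deformation and a colorisation, can be illustrated with a small pure-Python sketch operating on an image stored as rows of `(r, g, b)` tuples. This is not the installation's video software: the function names, the choice of tint color, and the rule that a negative mood selects the vortex while a positive one selects the tint are all assumptions made for illustration.

```python
import math


def swirl(image, strength):
    """Return a copy of `image` twisted around its centre.

    `strength` is the rotation in radians applied at the centre; it falls
    off linearly to zero at the corners.
    """
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    rmax = math.hypot(cx, cy) or 1.0
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dx, dy = x - cx, y - cy
            r = math.hypot(dx, dy)
            angle = math.atan2(dy, dx) + strength * (1 - r / rmax)
            # Nearest-neighbour sampling, clamped to the image bounds.
            sx = min(w - 1, max(0, round(cx + r * math.cos(angle))))
            sy = min(h - 1, max(0, round(cy + r * math.sin(angle))))
            row.append(image[sy][sx])
        out.append(row)
    return out


def tint(pixel, colour, amount):
    """Blend an (r, g, b) pixel toward `colour` by `amount` in [0, 1]."""
    return tuple(round((1 - amount) * p + amount * c)
                 for p, c in zip(pixel, colour))


def emotional_mirror(image, valence):
    """Assumed mapping: negative mood -> vortex, positive mood -> warm tint."""
    if valence < 0:
        return swirl(image, strength=-valence * math.pi)
    warm = (255, 200, 0)  # hypothetical tint colour
    return [[tint(p, warm, valence) for p in row] for row in image]
```

A neutral mood (`valence == 0`) leaves the frame unchanged under this mapping, so the distortion grows only as the emotional state moves away from neutral.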

The music

The emotional processes are used to morph the "virtual mirrors" and to influence a music composition software engine working in real time. Music is generated in real time, with an evolution linked to the emotional processes.
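How the composition engine maps emotion to music is not specified here. The sketch below shows one simple possibility under stated assumptions: a mood value selects a major or minor scale, an intensity value sets tempo and loudness, and each new note is a small random step along the scale. The functions `music_parameters` and `next_note`, the numeric ranges, and the use of MIDI note numbers are all hypothetical.

```python
import random

MAJOR = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of a major scale
MINOR = [0, 2, 3, 5, 7, 8, 10]  # semitone offsets of a natural minor scale


def music_parameters(valence, intensity):
    """Map mood (valence in [-1, 1], intensity in [0, 1]) to music settings."""
    return {
        "scale": MAJOR if valence >= 0 else MINOR,
        "tempo_bpm": 60 + 80 * intensity,      # 60 (calm) to 140 (agitated)
        "velocity": int(40 + 87 * intensity),  # MIDI velocity 40 to 127
    }


def next_note(previous_degree, params, rng):
    """Take a small random step along the scale; return (degree, MIDI note)."""
    step = rng.choice([-2, -1, 0, 1, 2])
    degree = max(0, min(13, previous_degree + step))  # two octaves of degrees
    octave, index = divmod(degree, 7)
    return degree, 60 + 12 * octave + params["scale"][index]


rng = random.Random(1998)  # seeded so the sketch is repeatable
params = music_parameters(valence=-0.5, intensity=0.8)
degree = 7
melody = []
for _ in range(8):
    degree, note = next_note(degree, params, rng)
    melody.append(note)
print(params["tempo_bpm"], melody)
```

Because the parameters are recomputed from the current mood before each note, the melody can drift between scales and tempi as the emotional state evolves.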

Realization

The robot is equipped with on-board sensors and a camera. It communicates over a radio link with a computer running the supervision software, which controls the artificial emotions module and the music, video, and movement modules. In addition, the robot has an on-board amplifier and loudspeaker system, connected by a radio link, which complements the audio equipment in the environment.

Three computers connected in a local network control the robot's different behaviors and tasks in real time (movement, "emotional mirrors," sound and music).
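The supervision role described above can be pictured as a dispatcher that forwards each emotional-state update to the movement, video, and music modules. The sketch below runs in a single process and is only an illustration: the `Supervisor` class, the JSON message format, and the mapping from mood to movement style are assumptions, and the actual radio and network protocols are not described in the source.

```python
import json


class Supervisor:
    """Forwards each emotional-state update to every registered module."""

    def __init__(self):
        self.modules = {}

    def register(self, name, handler):
        self.modules[name] = handler

    def publish(self, state):
        # Serialise the state as it might be before crossing a network link.
        message = json.dumps(state)
        return {name: handler(json.loads(message))
                for name, handler in self.modules.items()}


def movement_style(state):
    """Assumed mapping from mood to the movement styles named in the text."""
    return "slow and soft" if state["valence"] >= 0 else "nervous and quick"


supervisor = Supervisor()
supervisor.register("movement", movement_style)
supervisor.register("video", lambda s: "vortex" if s["valence"] < 0 else "tint")
print(supervisor.publish({"valence": -0.5}))
```

Keeping the modules behind a common message interface is what would let them run on separate machines, as in the three-computer setup described above.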